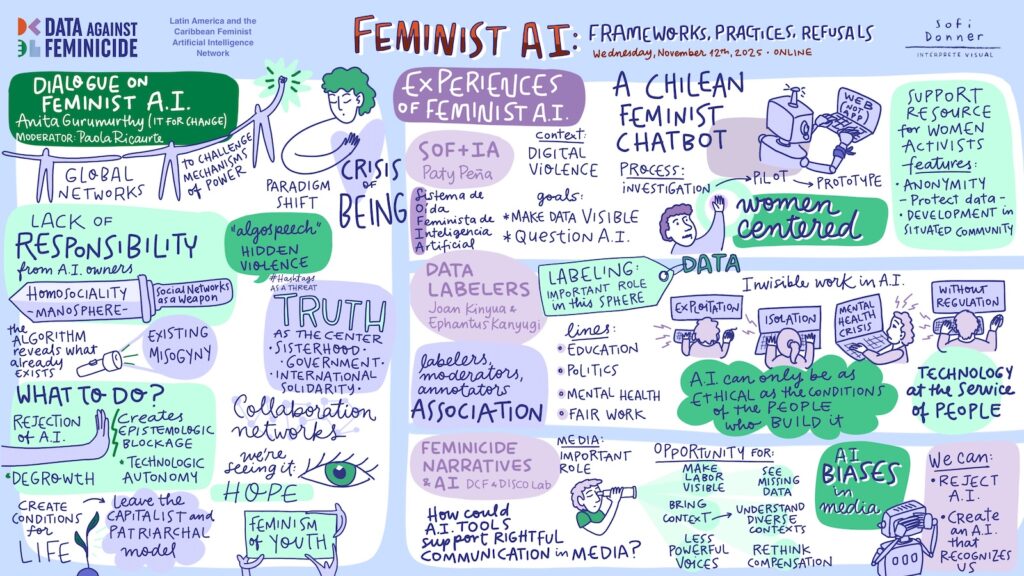

Feminist AI: Frameworks, Practices, Refusals

This annual gathering explored how artificial intelligence (AI) can shape, enhance, or undermine efforts to fight against gender-based violence and feminicide.

Full video

Participants

- Conceptual discussion with Anita Gurumurthy (IT for Change), moderated by Paola Ricaurte: AI, gender-based violence & feminicide: risks, possibilities, feminist approaches and refusal

- Feminist project spotlights

  - SOF+IA (Paty Peña)

  - Feminicide Narratives & AI (DCF & DISCO Lab, Brown)

  - Data Labelers Association (Joan Kinyua & Ephantus Kanyugi)

Visual summary

Summary

This community event aimed to create a space for dialogue about the development and proliferation of AI technologies and their implications for feminist activism and struggles against gender-related violence.

We addressed the widespread development of AI in extractive and exploitative ways, noting that AI systems often reproduce inequalities and carry deep gender, racialised, and colonial biases. The conversation highlighted that digital technology, particularly social media, has been weaponised by institutionalised patriarchies, normalising violence and democratising gender discourse.

One crucial concept explored was AI refusal, which the discussion situated within historical feminist knowledge as a way to reject the “poisoned pie” of global AI capital and dismantle structural subordination. True change requires confronting imperialism, rethinking political institutions, and restoring values and value beyond capitalist logics of scale and speed.

The event also featured three project spotlights demonstrating feminist approaches to technology and data work:

- SOF+IA: A feminist conversational AI chatbot developed in Chile to tackle digital violence against women. The project focuses on providing anonymity, gathering crucial data (which is often institutionally missing), and building community support.

- The Data Labelers Association: The first registered worker organisation in Kenya advocating for the rights and well-being of data labelers and content moderators, whose invisible human labour powers AI. They address severe exploitation, including massive pay disparities and lack of job security, as well as the need for mental health support for workers exposed to graphic content.

- Feminicide Narratives & AI: This collaboration uses collective and participatory data annotation to co-design a taxonomy of harmful and constructive journalistic practices regarding feminicide. By centring the expertise of annotators and activists, the project aims to critically engage with data work and remains intentionally open to the possibility of refusing to deploy AI tools in this sensitive domain if deemed undesirable.

Finally, we discussed how conventional frames like ‘responsible AI’ only correct symptoms, whereas feminist thought and practice are necessary to shift the “ontology of value” and ensure that technology serves humanity, not exploitation.